Cordanetes: Combining Corda and Kubernetes

December 11, 2019

Kubernetes is a powerful container orchestration and resource management tool. Corda is an open-source blockchain project, designed specifically for business.

If you’re reading this, you might have asked the question: can they work together? The short answer is yes, but it needs more care and consideration than a typical application. The Corda platform is stateful and network-dependent, so it needs more adjustment than the stateless worker services you often see in Kubernetes deployments. However, there are plenty of ways that Corda deployments can benefit from Kubernetes.

When it comes to running containers in production, the number of containers can grow quickly. Maintaining control of the operation, deployment, upgrading and health of these containers would require a dedicated team. In a similar fashion, if you were managing thousands of Corda nodes, you would certainly need the right people and tools to get it right. Given Kubernetes’ success in achieving a fairly similar feat, there’s certainly a thing or two you can learn from it.

There are several important factors to consider when including Kubernetes in a Corda deployment:

Horizontal Scaling vs Vertical Scaling

One of the best-known features of Kubernetes is Pod-level horizontal scaling: when your services receive more traffic, more instances are created across more machines, and the number grows or shrinks on demand. Corda currently supports vertical scaling: it scales by adding more power to a single machine rather than spreading the load across multiple machines. As described here, there are long-term plans for horizontal scaling that involve splitting out Corda’s flow worker components into separate JVM threads and increasing the number of threads in response to higher demand.

However, because Corda already achieves high throughput today, as shown in both internal and external analyses, few use cases need enough throughput to require horizontal scaling.

Although Corda doesn’t currently support horizontal scaling, there are still powerful features of Kubernetes that can benefit your Corda operation.

Auto-vertical Scaling

If a node reaches the full capacity of the machine on which it runs, the node must migrate to a higher-spec machine.

This process is time-consuming even when automated, and at scale it becomes laborious and error-prone.

Kubernetes supports vertical autoscaling at the Pod level through the Vertical Pod Autoscaler add-on. This means your cluster could be configured to adjust the CPU and memory allocated to a Pod, rescheduling it onto a larger or smaller machine where needed, so that it more accurately fits the anticipated power needs of your Corda service. For example, if certain subsets of nodes saw spikes in traffic at key times, then with the right usage analysis you could potentially reschedule them across machines to gain cost savings and optimise performance. That may be overkill for day-to-day core network service operations, like your notary, but could benefit a cluster of many nodes.
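A minimal sketch of what this could look like, assuming the Vertical Pod Autoscaler add-on (installed separately from core Kubernetes) is running in your cluster: the Python below builds a `VerticalPodAutoscaler` manifest as a dictionary and prints it as JSON, which `kubectl apply -f` accepts as readily as YAML. The StatefulSet name `corda-node-a`, the container name `corda` and the CPU and memory bounds are hypothetical placeholders, not sizing advice.

```python
import json

# Classic updateMode values of the autoscaling.k8s.io/v1 VPA API.
VALID_MODES = ("Off", "Initial", "Recreate", "Auto")


def vpa_manifest(workload: str, container: str,
                 min_cpu: str, min_mem: str,
                 max_cpu: str, max_mem: str,
                 mode: str = "Off") -> dict:
    """Build a VerticalPodAutoscaler manifest for one Corda node's StatefulSet.

    Assumes the VPA add-on is installed; names and bounds are hypothetical.
    """
    if mode not in VALID_MODES:
        raise ValueError(f"unsupported updateMode: {mode}")
    return {
        "apiVersion": "autoscaling.k8s.io/v1",
        "kind": "VerticalPodAutoscaler",
        "metadata": {"name": f"{workload}-vpa"},
        "spec": {
            # The workload whose Pods should be resized.
            "targetRef": {"apiVersion": "apps/v1", "kind": "StatefulSet", "name": workload},
            # "Off" only records recommendations; "Auto" may evict Pods to resize them.
            "updatePolicy": {"updateMode": mode},
            # Bound the recommendations so the node is neither starved nor oversized.
            "resourcePolicy": {
                "containerPolicies": [{
                    "containerName": container,
                    "minAllowed": {"cpu": min_cpu, "memory": min_mem},
                    "maxAllowed": {"cpu": max_cpu, "memory": max_mem},
                }]
            },
        },
    }


if __name__ == "__main__":
    # Hypothetical node name and bounds, for illustration only.
    print(json.dumps(vpa_manifest("corda-node-a", "corda", "1", "2Gi", "4", "8Gi"), indent=2))
```

Starting in `Off` mode, where the autoscaler only records recommendations without evicting Pods, is one low-risk way to gather usage data before letting it resize nodes automatically.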

This sounds great in theory, but experimentation is required to get a feel for self-healing timelines, connectivity guarantees, and how to ensure that previous instances of the node process have ceased, among other measures. Please do share your findings with us all.
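One possible starting point for that experimentation is to run each node as a single-replica StatefulSet. For a given Pod identity, a StatefulSet waits until the old Pod has fully terminated before creating its replacement, which helps ensure two copies of the same node never run at once, and its `volumeClaimTemplates` give the node persistent storage that follows it when it is rescheduled. The sketch below assumes a hypothetical image, mount path and storage size, plus a headless Service with the same name as the StatefulSet, which is not shown.

```python
import json


def corda_statefulset(name: str, image: str, storage: str = "10Gi") -> dict:
    """Single-replica StatefulSet for one Corda node.

    The image, mount path and storage size are hypothetical placeholders.
    """
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "StatefulSet",
        "metadata": {"name": name},
        "spec": {
            # Corda scales vertically, so each node runs as exactly one replica.
            "replicas": 1,
            # Assumed headless Service (not shown) giving the Pod a stable identity.
            "serviceName": name,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{
                        "name": "corda",
                        "image": image,
                        "volumeMounts": [{"name": "node-data",
                                          "mountPath": "/opt/corda/persistence"}],
                    }]
                },
            },
            # Each replica gets its own PersistentVolumeClaim, kept across restarts.
            "volumeClaimTemplates": [{
                "metadata": {"name": "node-data"},
                "spec": {
                    "accessModes": ["ReadWriteOnce"],
                    "resources": {"requests": {"storage": storage}},
                },
            }],
        },
    }


if __name__ == "__main__":
    print(json.dumps(corda_statefulset("corda-node-a", "example.com/corda-node:latest"), indent=2))
```

The trade-off of this at-most-one behaviour is that if a machine becomes unreachable, Kubernetes will not start a replacement Pod until the old one is confirmed gone or force-deleted, so self-healing timelines are one of the things worth measuring.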

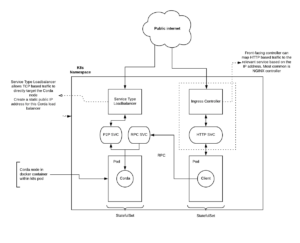

Connectivity

Corda mandates that every node has an IP address at which it can be uniquely discovered through the network map server. A LoadBalancer Service can be deployed so that Corda nodes are accessible both from the public internet and from within the cluster. All traffic directed to the load balancer’s IP address is forwarded to your Service on the specified port.

The defaults vary across cloud providers, but the majority support standard TCP and HTTP connections. Because Corda is stateful, you will likely require a load balancer Service for each node deployment, along with separate Services for RPC, as illustrated below.
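As a minimal sketch, assuming each node’s Pod carries a hypothetical `app: <node name>` label and listens for P2P traffic on port 10002 (use whatever port the `p2pAddress` in your node.conf specifies), the Python below generates one `LoadBalancer` Service per node, each exposing only the P2P port. RPC is deliberately left out because it should not be public.

```python
import json


def p2p_service(node: str, p2p_port: int) -> dict:
    """Public LoadBalancer Service exposing only a node's P2P port."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": f"{node}-p2p"},
        "spec": {
            "type": "LoadBalancer",
            # Route traffic only to the Pod labelled with this node's name.
            "selector": {"app": node},
            "ports": [{"name": "p2p", "protocol": "TCP",
                       "port": p2p_port, "targetPort": p2p_port}],
        },
    }


def p2p_services(nodes: list[str], p2p_port: int = 10002) -> list[dict]:
    # One load balancer per node: each node needs its own stable, unique address.
    return [p2p_service(node, p2p_port) for node in nodes]


if __name__ == "__main__":
    for svc in p2p_services(["partya", "partyb"]):
        print(json.dumps(svc, indent=2))
```

Once the cloud provider assigns each load balancer its external address, that is the address the node should advertise as its `p2pAddress`, so that the entry published to the network map actually routes back to the node.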

Ingress controllers, a special type of HTTP-level load balancer, are often NGINX-based by default and can be used to expose the HTTP API of a Corda client application.
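For the HTTP side, a sketch of an Ingress that routes a host name to a client application’s Service might look like the following. It assumes an NGINX ingress controller registered under the ingress class `nginx`, a hypothetical host `api.example.com` and a hypothetical `corda-client` Service on port 8080, and it uses the `networking.k8s.io/v1` Ingress API, which requires Kubernetes 1.19 or later. Only the client’s HTTP API goes through the Ingress; Corda’s P2P traffic is not HTTP and stays on its own load balancer.

```python
import json


def client_ingress(host: str, service: str, port: int,
                   ingress_class: str = "nginx") -> dict:
    """Ingress routing HTTP requests for `host` to a Corda client's Service."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {"name": f"{service}-ingress"},
        "spec": {
            # Selects which installed ingress controller handles this Ingress.
            "ingressClassName": ingress_class,
            "rules": [{
                "host": host,
                "http": {"paths": [{
                    # Forward every path under "/" to the client Service.
                    "path": "/",
                    "pathType": "Prefix",
                    "backend": {"service": {"name": service, "port": {"number": port}}},
                }]},
            }],
        },
    }


if __name__ == "__main__":
    print(json.dumps(client_ingress("api.example.com", "corda-client", 8080), indent=2))
```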

Secrets and Config Management

The Corda node is much more than just the Corda jar file. It must be paired with persistence, configuration, applications (CorDapps) and certificates. It goes without saying that the more protected this information is, the better.

The Corda Obfuscator may pose challenges with Kubernetes, which manages workloads at a level above individual VMs. The Obfuscator uses the machine’s MAC address to decrypt information required by the node, which is problematic when k8s Pods can be scheduled onto any machine in a cluster, each of which has a different MAC address.

Having said that, it is possible to take advantage of the additional security of Kubernetes by storing node.conf and the certificates folder as Secrets and mounting them into the Pod or container. There are still some risks with this approach, though. If you configure the Secret through a manifest (JSON or YAML) file, the secret data in that file is only encoded as base64. Base64 encoding is not an encryption method and should be treated as plain text: anyone who gains access to the manifest, or to the Secret object itself, can decode it. Be careful not to log, check in or send this material to anyone.
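To make the base64 point concrete, the sketch below builds an `Opaque` Secret from a hypothetical node.conf fragment and then recovers the original bytes with nothing more than `base64.b64decode`: no key is involved at any point. The Secret name and file contents are illustrative assumptions.

```python
import base64
import json


def secret_manifest(name: str, files: dict[str, bytes]) -> dict:
    """Build an Opaque Secret whose data values are base64-encoded file contents."""
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name},
        "type": "Opaque",
        "data": {key: base64.b64encode(value).decode("ascii")
                 for key, value in files.items()},
    }


def reveal(secret: dict) -> dict[str, bytes]:
    """Anyone holding the manifest can do this: base64 is an encoding, not encryption."""
    return {key: base64.b64decode(value) for key, value in secret["data"].items()}


if __name__ == "__main__":
    # Hypothetical node.conf fragment; a real Secret would also hold keystores.
    conf = b'myLegalName="O=PartyA,L=London,C=GB"\n'
    secret = secret_manifest("corda-node-a-config", {"node.conf": conf})
    print(json.dumps(secret, indent=2))
    assert reveal(secret)["node.conf"] == conf  # recovered without any key
```

Restricting read access to Secrets with RBAC, and enabling encryption at rest for Secrets in etcd, reduce the exposure inside the cluster, but neither protects a manifest file that has leaked.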

Health

Kubernetes offers features to check the health and readiness of your services. These probes are configured in your deployment YAML and target the port on which your Pod’s containers are listening. You can specify what to do when services don’t start, stop performing as normal, or exit unexpectedly. Corda doesn’t have dedicated support for this yet, but for now you can use TCP socket probes.
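A TCP socket probe only checks that something is accepting connections on a port. The Python sketch below mirrors that check with `socket.create_connection`, which can be handy when testing a node’s port by hand, and builds the matching probe fragment for a container spec. The port and timing values are hypothetical and should be tuned to how long your node takes to start; a passing probe shows the port is open, not that the node is processing flows correctly.

```python
import socket


def tcp_probe(host: str, port: int, timeout: float = 1.0) -> bool:
    """Succeed if a TCP connection can be opened, like a Kubernetes tcpSocket probe."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable or timed out
        return False


def probe_config(port: int, initial_delay: int = 60, period: int = 10) -> dict:
    """Probe fragment for a container spec (livenessProbe or readinessProbe)."""
    return {
        "tcpSocket": {"port": port},
        # Give the JVM and node start-up time before the first check.
        "initialDelaySeconds": initial_delay,
        "periodSeconds": period,
        # Consecutive failures tolerated before the probe is considered failed.
        "failureThreshold": 3,
    }
```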

Architectures

Considering the above, below are some architectures you could adopt.

1. Corda + RPC client

When creating Enterprise-grade systems with Corda, you’ll often end up pairing Corda with an RPC client. This gives you a way to define and expose APIs in line with your existing business logic, using tools familiar to you. To ensure a better separation of concerns, Corda and its client should preferably run in different Pods. This isolates the Corda service from client failures and vice versa. Ideally, Corda’s RPC port should not be exposed to the public, so create a separate RPC Service for the client to consume.

Pod IP addresses change whenever Pods are rescheduled, so rather than having the client connect to the Corda Pod directly, expose the Corda Pod’s RPC port through a Service that the client can connect to.
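A minimal sketch of such a Service, assuming the node Pod carries a hypothetical `app: corda-node-a` label and listens for RPC on a hypothetical port 10003: a `ClusterIP` Service is only reachable from inside the cluster, and it gives the client a stable DNS name that keeps working when the Corda Pod is rescheduled. The `cluster.local` suffix is the common default cluster domain and may differ in your cluster.

```python
import json


def rpc_service(node: str, rpc_port: int,
                namespace: str = "default") -> tuple[dict, str]:
    """Internal-only Service for a node's RPC port, plus the address a client would use."""
    name = f"{node}-rpc"
    manifest = {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            # ClusterIP is reachable only from inside the cluster, keeping RPC off the internet.
            "type": "ClusterIP",
            "selector": {"app": node},
            "ports": [{"name": "rpc", "protocol": "TCP",
                       "port": rpc_port, "targetPort": rpc_port}],
        },
    }
    # Stable DNS name for the client, independent of the node Pod's current IP.
    address = f"{name}.{namespace}.svc.cluster.local:{rpc_port}"
    return manifest, address


if __name__ == "__main__":
    manifest, address = rpc_service("corda-node-a", 10003)
    print(json.dumps(manifest, indent=2))
    print(address)
```

The client Pod would then be configured with that address as its RPC host and port instead of a Pod IP.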

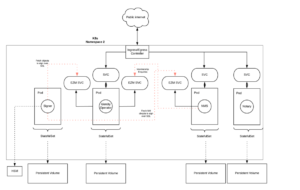

2. Corda Enterprise Network Manager

It is entirely possible to deploy your Corda network services on Kubernetes. A major advantage of Kubernetes here would likely be reducing downtime caused by out-of-memory failures, through resource monitoring and automatic restarts.

If you haven’t heard about Corda Enterprise Network Manager (CENM), now is a great chance to school yourself on an epic technology. Admittedly, Corda Enterprise Network Manager on Kubernetes probably warrants a separate blog post of its own.

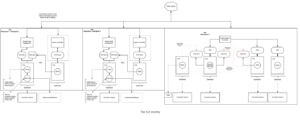

The main point here is that some components, namely the signing service (CA), are high security and so must be isolated from the internet. Furthermore, each component must be accessible to the other core network components. When configured correctly, they currently communicate over SSL, so you should deploy separate internal Services to enable that communication more safely. HTTP-based services can be exposed via an ingress controller as usual. The diagram above shows what such an architecture might look like.
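One way to enforce the signing service’s isolation inside the cluster is a NetworkPolicy that only admits traffic from other CENM components. The sketch below assumes the signing service Pods are labelled `app: cenm-signer` and the other core components `cenm-core: "true"` (both hypothetical labels), and that your cluster’s network plugin actually enforces NetworkPolicy, which not all plugins do. Because the only allowed source is a Pod selector in the same namespace, traffic from the internet is not admitted; the simplest complementary step is never to create a public load balancer for the signer.

```python
import json


def signer_isolation_policy(namespace: str = "cenm") -> dict:
    """NetworkPolicy admitting traffic to the signing service only from CENM core Pods."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "cenm-signer-isolation", "namespace": namespace},
        "spec": {
            # The policy applies to the signing service Pods (hypothetical label).
            "podSelector": {"matchLabels": {"app": "cenm-signer"}},
            # Once selected for Ingress, any traffic not matched below is dropped.
            "policyTypes": ["Ingress"],
            "ingress": [{
                # A bare podSelector matches Pods in this policy's namespace only.
                "from": [{"podSelector": {"matchLabels": {"cenm-core": "true"}}}],
            }],
        },
    }


if __name__ == "__main__":
    print(json.dumps(signer_isolation_policy(), indent=2))
```

NetworkPolicies allow reply traffic for permitted connections, so this ingress-only policy does not block connections that the signing service itself opens to other components.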

3. All together with monitoring

Putting all those components together, you could arrive at a pretty nifty deployment. Some might argue it is a better separation of concerns to house the CENM core components and participant services in different namespaces.

Cloud platforms

It makes sense, and is likely necessary, to take advantage of cloud-native support for Kubernetes: I am talking about the Azure AKSs, Amazon EKSs and OpenShifts of the world. With the most modern releases of these services you should encounter fewer issues, but some older versions of these platforms have nitty-gritty details that can make Corda deployment more challenging. For example, from my experience:

- On older versions of OpenShift Container Platform (OCP), the maximum number of publicly exposed ports allowed per Pod is 1: bad news for a service that requires RPC, P2P and SSH.

- Older versions of OCP on AWS cannot expose non-HTTP traffic to Pods without the use of special cloud plugins, which might hinder support for production workloads.

- Some deployments only allow a maximum of 20 ELBs (Elastic Load Balancers) per AWS region. Not a major blocker, but a blocker nonetheless when each node needs its own load balancer. This could probably be resolved by working directly with the cloud provider.

The key takeaway is that the devil is in the details. Not all of you will have the luxury of deploying a fresh cluster for your Corda deployment; my bet is that a large portion will be carving off a corner of an existing cluster. Regardless, make sure to work with your container platform experts to ensure Corda’s needs are met.

Conclusion: Should I use it?

While Corda is, for a distributed system, relatively easy to deploy, Kubernetes has a notoriously steep learning curve. If you don’t have a team that’s willing to address the challenges that Kubernetes presents and experiment to overcome them, running Corda on Kubernetes may prove difficult.

If you plan on running a node-as-a-service, operating many nodes on behalf of other entities, then Kubernetes may help you manage them and drive down costs compared to a manually scripted deployment. Don’t forget that if you’re a single node operator, there’s no reason why systemd with surrounding tooling can’t fulfil most of your needs.

Corda’s Lead Platform Engineer, Mike Hearn, has also written up useful things to look out for when using Corda and Kubernetes here.

R3 is expanding rapidly. If you want to be part of making blockchain real, check out this post from R3’s Head of Engineering!